
I.J. Intelligent Systems and Applications, 2018, 11, 11-19

Published Online November 2018 in MECS (http://www.mecs-press.org/)

DOI: 10.5815/ijisa.2018.11.02

Medical Big Data Classification Using a Combination of Random Forest Classifier and K-Means Clustering

R. Saravana kumar

Professor, Department of Computer Science and Engineering, Dayananda Sagar Academy of Technology and Management, Bangalore

E-mail: saravanaram0516@gmail.com

P. Manikandan

Professor, Department of Computer Science and Engineering, Malla Reddy Engineering College for Women, Maisammaguda, Secunderabad, Telangana

E-mail: parasumani001@gmail.com

Received: 19 February 2018; Accepted: 20 May 2018; Published: 08 November 2018

Copyright © 2018 MECS I.J. Intelligent Systems and Applications, 2018, 11, 11-19

Abstract—The random forest classifier is an efficient classification algorithm that has recently been used in many big data applications. Medical big data comprises large, complex data such as patient records, medicine details, and staff data. Such massive data is not easy to classify and handle efficiently. Traditional methods such as the Linear Classifier K-Nearest Neighbor and the Random Clustering K-Nearest Neighbor give lower accuracy and risk data deletion and missing data. Hence we adopt Random Forest classification using the K-means clustering algorithm to overcome these complexity and accuracy issues. In this paper, the medical big data is first partitioned into clusters by the k-means algorithm based on some dimension. Each cluster is then classified by the random forest classifier algorithm, which generates decision trees and classifies the data based on the specified criteria. Compared with existing systems, the experimental results indicate that the proposed algorithm increases classification accuracy.

Index Terms—Decision trees, k-means clustering, medical big data, random forest, classification.

I. INTRODUCTION

Massive and complex data, usually on the order of exabytes (10^18 bytes), are referred to as big data. Such large, sensitive data is used frequently in organizations in fields such as biomedicine, IT, and banking. With conventional databases and other data analysis tools, such data is difficult to manage, standardize [1], and secure [2, 3]. Medical big data raises many issues regarding its structure, storage, and analysis. Several techniques [4-8] have been followed to increase accuracy, reduce cost, and improve the efficiency of big data processing. Big data applications use traditional classification algorithms such as decision trees, support vector machines, Naive Bayes, neural networks, and k-Nearest Neighbors (kNN), as well as the Differential Evolution (DE) [9] algorithm, Machine Learning [10] algorithms, and Big Data Analytics (BDA) [11, 12].

The two traditional kNN approaches used in big data are fast search for the nearest samples and the selection of representative samples (or elimination of certain samples). For training, the earlier version of SVM-KNN [13, 14] has to compute query distances, which is comparatively slow, and in big data this computational complexity is high. Fuzzy classification is the task of partitioning a feature space into fuzzy classes; the feature space is described by fuzzy regions, each governed by fuzzy rules [15]. Feature subset selection and linear discriminant analysis are two methods used in the neuro-fuzzy classifier to identify the important feature subsets, so that the characteristics of the data distribution are preserved in the feature space when the neuro-fuzzy classifier is trained [16]. The main drawback of this method is that it needs many fuzzy rules: the fuzzy neural classifier identifies data weakly, which increases cost and time.

In this paper, we propose a combined clustering and classification technique to classify medical big data. The proposed technique executes the k-means clustering method and the RF (Random Forest) classification method jointly. Compared with other clustering algorithms, k-means clustering performs better because it takes less time to partition high-dimensional datasets. RF is an efficient, non-parametric learning method that is easy to interpret and explain. First, the k-means clustering method separates the high-dimensional medical data into several parts, each of which is considered a cluster. After the mean of each cluster is estimated, the difference between each cluster member and the cluster mean is computed. The clustered information is then classified using the RF classification technique. To recognize the underrepresented class effectively, the RF technique can handle datasets as large as required. With the proposed RF approach, medical big data can be handled accurately and efficiently.

The rest of the paper is organized as follows. Section II briefly reviews related work. Section III describes the training and testing processes. Section IV presents the results achieved by the proposed technique and the related discussion, and Section V concludes the paper.

II. RELATED WORKS

Gang Luo [17] introduced a system named Predict-ML that transforms big clinical data into datasets used in various applications. Results are predicted automatically, and the main advantages of the system are lower time and cost. The predictive model can guide personalized medicine and clinical decision making. However, the software takes several years to build fully and is therefore not easily affordable.

For more flexibility in dynamic data streams, Mahardhika Pratama et al. [18] presented a new evolving interval type-2 fuzzy rule-based classifier (eT2Class). Compared with state-of-the-art EFCs, it produces more reliable classification rates while retaining a more compact and parsimonious rule base. Based on the summarization and generalization power of data streams, the initial data stream was pruned and the fuzzy rules were grown automatically. The technique achieved accuracy and reliability; however, prediction and management of big data remained complex.

P. Gutiérrez et al. [19] designed a KNN rule classifier based on GPU devices that removes the dependency between dataset size and GPU memory requirements. The method uses efficient CPU-GPU communication, keeps GPU memory usage stable irrespective of dataset size, and reduces run time significantly, from hours to minutes. The design is best suited to lazy learning algorithms such as the KNN rule. It reduced time complexity and improved run-time performance, but computing nearest-neighbor distances for every training dataset remained complex for big data.

To reduce the complexity of big data, Entesar Althagafy and M. Rizwan Jameel Qureshi [15] introduced a novel architecture. Big data in IT companies includes large, complex data that is difficult to analyze efficiently. To improve quality of service (QoS), the system integrates the Amazon Web Services (AWS) remote cloud, Eucalyptus, and Hadoop. All incoming requests are accepted by the AWS remote cloud and forwarded intelligently to the most suitable Eucalyptus cloud. The architecture overcame this complexity and improved performance for incoming requests from multiple clients, reducing time and cost and increasing QoS. Data security and privacy were its major limitation.

Zhang Yaoxue et al. [20] presented a survey of cloud computing and analyzed related distributed computing technologies. To support IoT big data, they introduced two promising computing paradigms: transparent computing and fog computing. Computational performance for large volumes of incoming data requests from multiple clients improved, but security for sensitive data was weaker.

Yichuan Wang and Nick Hajli [21] introduced a model of big data analytics-enabled business value. The concept of information lifecycle management (ILM) was used to explain all phases of the information life cycle in a big data architecture. By analyzing secondary data consisting of big data cases in the healthcare context, the work also explores three path-to-value chains for achieving big data analytics success. It thus gives healthcare practitioners a new methodology for analyzing big data for business transformation and offers a detailed investigation of big data analytics implementation. Although it is flexible in dealing with big data, it provided no proper method to retain and manage data efficiently.

To reduce the challenges that big data poses in radiology and other healthcare organizations, P. Marcheschi [22] implemented the HL7 (Health Level Seven) CDA technique. Radiology has benefited greatly from the DICOM (Digital Imaging and Communications in Medicine) standard, which developers can implement quickly. Wider use of the FHIR standard simplifies developers' work by making document creation less abstract. The implementation makes more templates available and, owing to its simplicity and completeness, proved more successful. Its major drawback was the lack of a "plug and play" solution for data standardization.

D. Dimitrov [23] presented the medical Internet of Things (mIoT) and big data, covering devices that track real-time health data, devices that auto-administer therapies, and devices that constantly monitor health indicators while a patient self-manages a therapy. Such wireless devices can be implemented on smartphones, and the method reduces the time end users spend. Diagnoses can be made based on symptoms. However, the method cannot determine the exact health condition of users and therefore cannot be fully trusted. Modifications are accordingly needed to keep the data current, and the system should also be made more user friendly.

III. PROPOSED METHOD

This section introduces two processes: training and testing. In the training process, the medical big data is first partitioned using K-means clustering. The partitioned datasets are then randomly sampled, and a decision tree is generated for each sampled dataset. In the testing process, each test sample is classified from the values generated by the decision trees.
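The K-means partitioning that opens the training process can be sketched in Python as follows. This is a minimal, illustrative sketch, not the authors' implementation: the function names (`squared_distance`, `kmeans`, `mean_distance`), the choice of initial centroids as randomly picked data rows, the iteration cap `max_iter`, and the toy data are assumptions. It alternates the data-assignment step (nearest centroid by squared Euclidean distance, equation (1) in Section B) with the centroid-update step (mean of the assigned points, equation (2)), stops when no point changes cluster, and computes the mean point-to-centroid distance that Section B uses to compare runs over a range of K.

```python
import math
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans(points, k, max_iter=100, seed=0):
    """Illustrative K-means: alternate data assignment and centroid update."""
    rng = random.Random(seed)
    # Assumption: initial centroids are k rows picked at random (without replacement).
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [None] * len(points)
    for _ in range(max_iter):
        # Step 1 (data assignment): nearest centroid by squared Euclidean distance.
        new_labels = [
            min(range(k), key=lambda i: squared_distance(centroids[i], x))
            for x in points
        ]
        if new_labels == labels:
            break  # no point changed cluster, so the algorithm has converged
        labels = new_labels
        # Step 2 (centroid update): mean of all points assigned to each cluster.
        for i in range(k):
            members = [x for x, lab in zip(points, labels) if lab == i]
            if members:  # an empty cluster keeps its previous centroid
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, labels


def mean_distance(points, centroids, labels):
    """Mean Euclidean distance between each point and its cluster centroid."""
    total = sum(math.sqrt(squared_distance(x, centroids[lab]))
                for x, lab in zip(points, labels))
    return total / len(points)


# Toy two-group data set (hypothetical values, not from the paper).
data = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
for k in (1, 2, len(data)):
    centroids, labels = kmeans(data, k)
    print(k, round(mean_distance(data, centroids, labels), 3))
```

With K equal to the number of (distinct) points, every point becomes its own centroid and the mean distance is 0.0, the extreme case described under "Choosing K". Because the starting centroids are random, intermediate values of K can depend on `seed`; comparing more than one run, as the text suggests, guards against a poor start.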


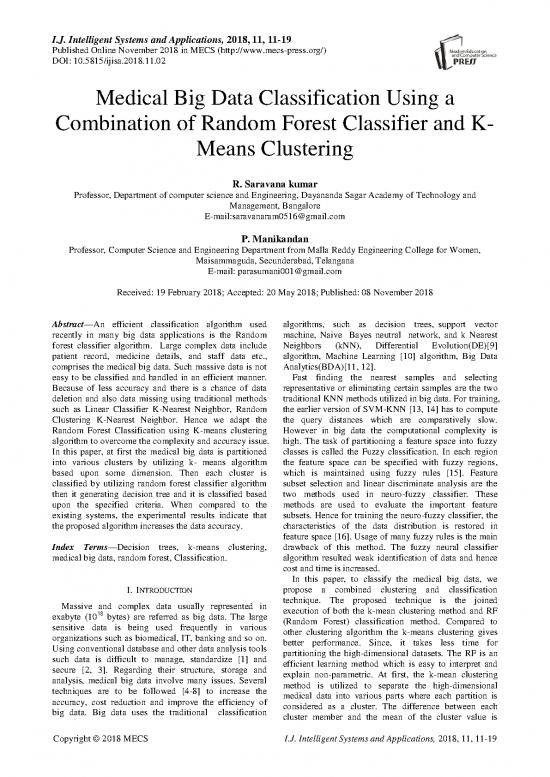

Medical Bigdata Training Process

K-means clustering

Training sample set

Randomization

Sample Sample Sample

subset 1 subset 2 subset n

Test sample Decision tree 1 Decision tree 2 Decision tree n

Class A Class B Class n

Majority Voting

Testing Process

Final Class

Fig.1. Random forest Classification using K -means Clustering
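The randomization, tree-growing, and majority-voting stages of Fig. 1 correspond to the random forest algorithm in Section C: a bootstrap sample per tree, an unpruned tree, m_try randomly chosen predictors at each internal node, and a majority vote over all trees. The Python sketch below illustrates those steps; it is not the authors' code. Assumptions: the split criterion is Gini impurity (the text only refers to a purity measure of each node), predictors are numeric with binary "≤ threshold" splits, class labels are non-tuple values such as strings, vote ties go to the label counted first, and the names `gini`, `grow_tree`, `predict_tree`, `train_forest`, and `predict_forest` are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels; 0.0 means the node is pure."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def grow_tree(rows, labels, m_try, rng):
    """Grow an unpruned tree; each internal node considers only m_try random predictors.

    A leaf is a class label (assumed not to be a tuple); an internal node is
    the tuple (feature, threshold, left_subtree, right_subtree).
    """
    if len(set(labels)) == 1:
        return labels[0]  # pure node becomes a leaf
    best = None
    for f in rng.sample(range(len(rows[0])), m_try):  # m_try <= number of predictors
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            # Weighted Gini impurity of the two children (lower means purer).
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:
        # None of the selected predictors separates these rows: majority-class leaf.
        return Counter(labels).most_common(1)[0][0]
    _, f, t = best
    left_idx = [i for i, r in enumerate(rows) if r[f] <= t]
    right_idx = [i for i, r in enumerate(rows) if r[f] > t]
    return (
        f,
        t,
        grow_tree([rows[i] for i in left_idx], [labels[i] for i in left_idx], m_try, rng),
        grow_tree([rows[i] for i in right_idx], [labels[i] for i in right_idx], m_try, rng),
    )


def predict_tree(node, x):
    """Follow the splits from the root down to a leaf label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def train_forest(rows, labels, n_trees, m_try, seed=0):
    """Build n_trees trees, each on its own bootstrap sample of the training set."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in range(len(rows))]  # with replacement
        forest.append(grow_tree([rows[i] for i in idx], [labels[i] for i in idx], m_try, rng))
    return forest


def predict_forest(forest, x):
    """Majority vote over the class predicted by every tree."""
    votes = Counter(predict_tree(tree, x) for tree in forest)
    return votes.most_common(1)[0][0]


# Toy one-predictor training set (hypothetical values, not from the paper).
rows = [(1.0,), (1.2,), (0.8,), (5.0,), (5.3,), (4.9,)]
labels = ["A", "A", "A", "B", "B", "B"]
forest = train_forest(rows, labels, n_trees=25, m_try=1)
print(predict_forest(forest, (0.0,)), predict_forest(forest, (10.0,)))
```

Setting `m_try` to the total number of predictors makes every node consider all predictors, which is the bagging special case noted in Section C.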

Fig. 1 shows the classification of medical big data using random forest. The medical big data is partitioned by k-means clustering into a number of groups called clusters. The clustered data is then classified with the RF classification method, starting with decision tree generation. At each split, the random forest selects a random subset of predictors, even for small sample sizes; this random selection further reduces variance and hence increases accuracy. A value is generated by each decision tree, and the final class is selected by majority voting.

A. Training Process

The medical big data contains massive and complex data such as management information, staff details, and medicinal information. The random forest classifier is used to classify such data accurately and efficiently. The training process first clusters the big data to partition it, and random subsets are selected from the partitions. Decision trees are then generated from the random subsets.

B. K-means clustering

1) Choosing K: K-means clustering uses repeated refinement to produce the best result. The number of clusters K is given as an input together with the dataset, and for a particular pre-chosen K the algorithm determines the cluster labels of the data set. To choose K, the K-means algorithm is run on the dataset for a range of K values and the results are compared. There is no general method for determining the exact value of K, but an accurate estimate can be obtained in several ways. The most commonly used method compares, across different values of K, the mean distance between the data points and their cluster centroid. Increasing the number of clusters always reduces this metric, which reaches the extreme of zero when K equals the number of data points, so the metric cannot simply be minimized. K can also be determined by methods such as cross-validation, information criteria, the information-theoretic jump method, the silhouette method, and the G-means algorithm.

Monitoring how the data points are distributed across the groups for each K provides insight into how the algorithm splits the data. Each data point in the data set is a collection of features. The algorithm starts by selecting initial cluster centroids, which are either randomly generated or randomly selected from the data set. It then iterates between two steps:

Step 1: Data assignment: Each cluster is defined by its centroid. In the data assignment step, each data point is assigned to its nearest centroid based on the squared Euclidean distance. More formally, if $c_i$ are the centroids in the set $C$, then each data point $x$ is assigned to the cluster given by


$$\arg\min_{c_i \in C} \operatorname{dist}(c_i, x)^2 \tag{1}$$

In equation (1), dist is the Euclidean distance, so each data point is assigned to the centroid at minimum distance. Let $S_i$ be the set of data points assigned to the $i$-th cluster centroid.

Step 2: Centroid update: The cluster centroids are recomputed. As equation (2) shows, each centroid is the mean of all data points assigned to its cluster:

$$c_i = \frac{1}{|S_i|} \sum_{x_i \in S_i} x_i \tag{2}$$

The two steps are repeated until no data point changes cluster. The algorithm is guaranteed to converge, but not necessarily to the best possible outcome, so running it more than once with randomized starting centroids may give a better result.

C. Random Forest Algorithm

Input: Training datasets, test sample
Output: Majority vote of all individually trained trees after classification

Let $T_{trees}$ be the number of trees to build. For each of the $T_{trees}$ iterations:

1. Select a new bootstrap sample from the training set.
2. Grow an unpruned tree on the bootstrap sample.
3. At each internal node, randomly select $m_{try}$ predictors and determine the best split using only these predictors.

The best split of the dataset is chosen according to whether the data is being used for regression or classification. $T_{trees}$ is selected efficiently by building trees until the entire dataset has been split. $m_{try}$ is the number of predictors randomly selected from the dataset for each split; when $m_{try} = k$, the total number of predictors, random forest reduces to the special case of bagging. Random forest retains many benefits of decision trees, such as handling missing values and supporting both continuous and categorical prediction. The forest model is built first and is then used to make predictions. In general, the random forest can be specified as follows.

Step 1: First, the dataset is created as

$$S = \begin{bmatrix} f_{x1} & f_{y1} & \cdots & Z_1 \\ \vdots & \vdots & \ddots & \vdots \\ f_{xn} & f_{yn} & \cdots & Z_n \end{bmatrix} \tag{3}$$

where $f_{x1}$ is feature $x$ of the 1st sample and $f_{yn}$ is feature $y$ of the $n$-th sample; the $Z$ features of the samples are defined likewise. Equation (3) depicts the randomized training dataset.

Step 2: Random data subsets are then created based on the criteria. These subsets are referred to as decision trees.

Decision tree 1:

$$S_1 = \begin{bmatrix} f_{x12} & f_{y12} & \cdots & Z_{12} \\ \vdots & \vdots & \ddots & \vdots \\ f_{x35} & f_{y35} & \cdots & Z_{35} \end{bmatrix} \tag{4}$$

Decision tree 2:

$$S_2 = \begin{bmatrix} f_{x2} & f_{y2} & \cdots & Z_{2} \\ \vdots & \vdots & \ddots & \vdots \\ f_{x20} & f_{y20} & \cdots & Z_{20} \end{bmatrix} \tag{5}$$

Decision tree $t$:

$$S_t = \begin{bmatrix} f_{x4} & f_{y4} & \cdots & Z_{4} \\ \vdots & \vdots & \ddots & \vdots \\ f_{x12} & f_{y12} & \cdots & Z_{12} \end{bmatrix} \tag{6}$$

where $S_1, S_2, \ldots, S_t \subseteq S$. Equations (4), (5), and (6) show that $S_1$, $S_2$, and $S_t$ correspond to decision trees 1, 2, and $t$, respectively; in this way, $t$ decision trees are generated from the given dataset.

Step 3: The final decision values are evaluated from the decision trees. The random forest compares the values produced for the test sample by all trees to determine the majority vote, and the class label receiving the majority vote is taken as the result.

D. Testing Process

Initially, whether a decision is true or false is predicted from two types of values. If the set contains samples of different patterns, the entire data set is split into single-pattern subsamples. When a set contains only samples belonging to a single pattern, the decision tree represents it by a leaf. Feature selection is improved by the purity measure of each node. As in equation (7), the general dataset can be converted into decision trees:

$$F(x) = F(x, a) = F_{y_T}(x) = \sum_{m=1}^{M} c_m \, I(x \in R_m) \tag{7}$$

where $I(\cdot)$ is the indicator function, $R_m$ is the region of the feature space covered by the $m$-th of the $M$ leaves, and $c_m$ is the value assigned to that region.
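Equation (7) expresses a tree's output as a sum over its leaves of a leaf value $c_m$ times the indicator $I(x \in R_m)$; because the leaf regions are disjoint and cover the feature space, exactly one term is non-zero for any input. The Python sketch below evaluates that sum directly. It is an illustration under assumptions, not the authors' implementation: each leaf region is taken to be an axis-aligned box of half-open intervals (low, high], so a point lying exactly on a threshold falls in the left region as in a "≤ threshold goes left" split, and the two-leaf tree with numeric leaf values 0.0 and 1.0 (standing for two classes) is hypothetical.

```python
import math


def indicator(x, region):
    """I(x in R_m): region holds one half-open interval (low, high] per feature."""
    return 1 if all(low < xi <= high for xi, (low, high) in zip(x, region)) else 0


def tree_function(x, leaves):
    """Evaluate F(x) = sum over m of c_m * I(x in R_m) for a list of (R_m, c_m) pairs."""
    return sum(c_m * indicator(x, region) for region, c_m in leaves)


# Hypothetical depth-1 tree on two features that splits feature 0 at 1.2;
# c_m = 0.0 stands for one class and c_m = 1.0 for the other.
INF = math.inf
leaves = [
    ([(-INF, 1.2), (-INF, INF)], 0.0),  # R_1: x0 <= 1.2
    ([(1.2, INF), (-INF, INF)], 1.0),   # R_2: x0 > 1.2
]

print(tree_function((1.0, 5.0), leaves), tree_function((5.0, 5.0), leaves))
```

Representing a tree as a list of (region, value) pairs mirrors the sum in equation (7) term by term; a practical implementation would instead descend the splits to find the single active leaf, as the forest sketch does with `predict_tree`.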
