
The Hitchhiker’s Guide to Cross-Platform OpenCL Application Development

Tyler Sorensen, Imperial College London, t.sorensen15@imperial.ac.uk

Alastair F. Donaldson, Imperial College London, alastair.donaldson@imperial.ac.uk

ABSTRACT % of papers that evaluate OpenCL

One of the benefits to programming of OpenCL is plat- implementations from Number of papers that evaluate an

form portability. That is, an OpenCL program that fol- 1, 2, and 3 GPU vendors OpenCL GPU implementation from

each vendor

lows the OpenCL specification should, in principle, execute 6% 39

reliably on any platform that supports OpenCL. To assess (3)

the current state of OpenCL portability, we provide an ex-

perience report examining two sets of open source bench- 36% 23

marksthatweattemptedtoexecuteacrossavarietyofGPU (18) 58%

platforms, via OpenCL. We report on the portability issues (29) 8

we encountered, where applications would execute success- 3 1

fully on one platform but fail on another. We classify issues

into three groups: (1) framework bugs, where the vendor-

provided OpenCL framework fails; (2) specification limita- 1 2 3

tions, where the OpenCL specification is unclear and where

different GPU platforms exhibit different behaviours; and

(3) programming bugs, where non-portability arises due to

the program exercising behaviours that are incorrect or un- Figure 1: The number of vendors whose OpenCL

defined according to the OpenCL specification. The issues GPUimplementationsareevaluatedin50recentpa-

we encountered slowed the development process associated pers listed at http://hgpu.org

with our sets of applications, but we view the issues as pro-

viding exciting motivation for future testing and verification

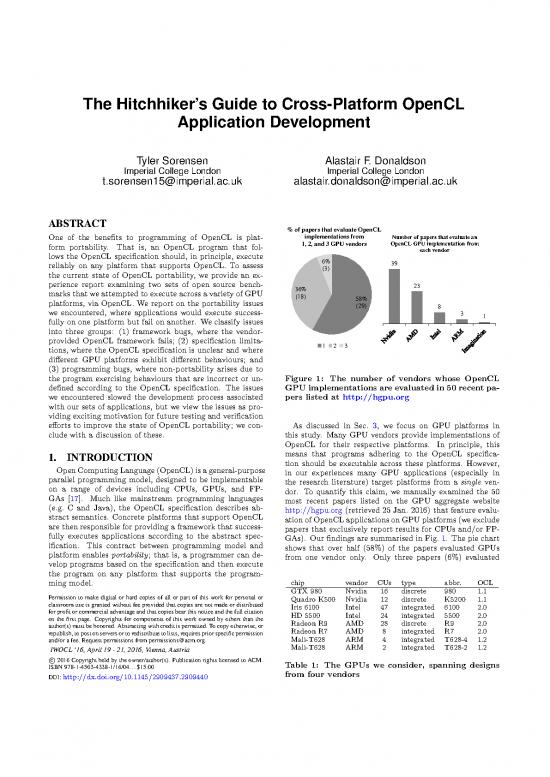

efforts to improve the state of OpenCL portability; we con- As discussed in Sec. 3, we focus on GPU platforms in

clude with a discussion of these. this study. Many GPU vendors provide implementations of

OpenCL for their respective platforms. In principle, this

1. INTRODUCTION means that programs adhering to the OpenCL specifica-

tion should be executable across these platforms. However,

OpenComputingLanguage(OpenCL)isageneral-purpose in our experiences many GPU applications (especially in

parallel programming model, designed to be implementable the research literature) target platforms from a single ven-

on a range of devices including CPUs, GPUs, and FP- dor. To quantify this claim, we manually examined the 50

GAs [17]. Much like mainstream programming languages most recent papers listed on the GPU aggregate website

(e.g. C and Java), the OpenCL specification describes ab- http://hgpu.org (retrieved 25 Jan. 2016) that feature evalu-

stract semantics. Concrete platforms that support OpenCL ation of OpenCLapplicationsonGPUplatforms(weexclude

are then responsible for providing a framework that success- papers that exclusively report results for CPUs and/or FP-

fully executes applications according to the abstract spec- GAs). Our findings are summarised in Fig. 1. The pie chart

ification. This contract between programming model and shows that over half (58%) of the papers evaluated GPUs

platform enables portability; that is, a programmer can de- from one vendor only. Only three papers (6%) evaluated

velop programs based on the specification and then execute

the program on any platform that supports the program-

ming model. chip vendor CUs type abbr. OCL

GTX980 Nvidia 16 discrete 980 1.1

Permission to make digital or hard copies of all or part of this work for personal or Quadro K500 Nvidia 12 discrete K5200 1.1

classroom use is granted without fee provided that copies are not made or distributed Iris 6100 Intel 47 integrated 6100 2.0

for profit or commercial advantage and that copies bear this notice and the full citation HD5500 Intel 24 integrated 5500 2.0

on the first page. Copyrights for components of this work owned by others than the Radeon R9 AMD 28 discrete R9 2.0

author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or

republish, to post on servers or to redistribute to lists, requires prior specific permission Radeon R7 AMD 8 integrated R7 2.0

and/or a fee. Request permissions from permissions@acm.org. Mali-T628 ARM 4 integrated T628-4 1.2

IWOCL’16,April19-21,2016,Vienna,Austria Mali-T628 ARM 2 integrated T628-2 1.2

c

2016Copyright held by the owner/author(s). Publication rights licensed to ACM. Table 1: The GPUs we consider, spanning designs

ISBN978-1-4503-4338-1/16/04...$15.00

DOI:http://dx.doi.org/10.1145/2909437.2909440 from four vendors

benchmark app. name description GPUarchitecture source language

Pannotia p-sssp single source shortest path AMDRadeonHD7000 OpenCL 1.0

Pannotia p-mis maximal independent set AMDRadeonHD7000 OpenCL 1.0

Pannotia p-colour graph colouring AMDRadeonHD7000 OpenCL 1.0

Pannotia p-bc betweenness centrality AMDRadeonHD7000 OpenCL 1.0

Lonestar ls-mst minimum spanning tree Nvidia Kepler and Fermi CUDA7

Lonestar ls-dmr delaunay mesh refinement Nvidia Kepler and Fermi CUDA7

Lonestar ls-bfs breadth first search Nvidia Kepler and Fermi CUDA7

Lonestar ls-sssp single source shortest path Nvidia Kepler and Fermi CUDA7

Table 2: The applications we consider

on GPUs from three vendors, and no paper presented ex- • Program bugs, where the original program contains

periments from more than three vendors. The figure also a bug that we observe to be dormant when the pro-

shows a histogram counting the number of papers that con- gram is executed on the originally-targeted platform,

ducted evaluation on a GPU from each vendor. Nvidia and but which appears when the program is executed on

AMDare by far the most popular, even though other ma- different platforms.

jor vendors (e.g. ARM, Imagination, Qualcomm) all provide

OpenCLsupportfortheirGPUs. Ourinvestigationsuggests Several recent works have raised reliability concerns in re-

that insufficient effort has been put into assessing the guar- lation to GPU programming. Compiler fuzzing has revealed

antees of portability that OpenCL aims to provide. many bugs in OpenCL compilers [19], targeted litmus tests

In this paper, we discuss our experiences with porting and have shown surprising hardware behaviours with respect to

running several open source applications across eight GPUs relaxed memory [1], and program analysis tools for OpenCL

spanning four vendors, detailed in Tab. 1. For each chip we have revealed correctness issues, such as data races, when

give the full GPU name, vendor, number of compute units used to scrutinise open source benchmark suites [3, 10]. In

(CUs), specify whether the GPU is discrete or integrated, contrast to this prior work, which specifically set out to ex-

provide a short name that we use throughout the paper for posebugs, either through engineered synthetic programs [19,

brevity, and indicate which version of OpenCL the GPU sup- 1], or by searching for defects that might arise under rare

ports (OCL).AsTab.1shows,weconsiderGPUsofdifferent conditions [3, 10], we report here on portability issues that

sizes (based on number of compute units), and consider both we encountered “in the wild”. These issues arose without

integrated and discrete chips. We also attempt to diversify provocation when attempting to run open source applica-

the intra-vendor chips. For Nvidia the 980 and K5200 are tions. In fact, as discussed further in Section 3, the porting

from different Nvidia architectures (Maxwell and Kepler, re- effort that led to this study was undertaken as part of a sep-

spectively). For Intel the 6100 is part of the higher end Iris arate, ongoing research project; to make progress on that re-

product line, while the 5500 is part of the consumer HD se- search project we were hoping that we would not encounter

ries. The applications we consider (which are summarised in such issues. We believe that the “real-world” nature of the

Tab. 2) are taken from two benchmark suites, Pannotia [9] issues experienced may be closer to what GPU application

and Lonestar [8]. For each application we give the bench- developers encounter day-to-day, compared with the issues

mark suite it is associated with, a short description, the exposed by targeted testing and formal verification.

GPUarchitecture family the application was evaluated on, Ourhopeisthatthisreportwillmakethefollowingcontri-

and the original source language of the application. We de- butions to the OpenCL community. For software engineers

scribe the benchmark suites and our motivation for choosing endeavouring to develop portable OpenCL applications, it

these applications in more detail in Sec. 3. can serve as hazard map for issues to be aware of, and sug-

This report serves to assess the current state of portability gestions for working around such issues. For vendors, it can

for OpenCL applications across a range of GPUs, by detail- serve to identify areas in OpenCL frameworks that would

ing the issues that blocked portability of the applications benefit from more robust examination and testing. For re-

we studied. In this work, we consider semantic portability searchers, the issues we report on may serve as motivational

rather than performance portability; that is, the issues we case-studies for new verification and testing methods.

document deal with the functional behaviour of applications Despite the challenges we faced, in most cases we were

rather than runtime performance. Prior work has exam- able to find a work-around, and overall we consider our ex-

ined and addressed the issue of performance portability for perience a success: OpenCL application portability can be

OpenCLprogramsonCPUsandGPUs(forexample[25,26, achieved with effort, and this effort will diminish as vendor

2]); however, we encountered these issues when simply at- implementations improve, aspects of the specification are

tempting to run the applications across GPUs, without any clarified, and better analysis tools become available.

attempt to optimise runtime per platform. We report on The structure of the paper is as follows: Sec. 2 contains

these semantic portability issues in detail, classifying them an overview of OpenCL and common elements of a GPU

into three main categories: OpenCL framework. The applications we ported are de-

• Framework bugs, where a vendor-provided OpenCL scribed in Sec. 3. Section 4 documents the issues we classi-

implementation behaves incorrectly according to the fied as framework bugs. Section 5 documents the issues we

OpenCL specification. classified as specification limitations. Section 6 documents

the issues we classified as programming bugs. We then sug-

• Specification limitations, where the OpenCL speci- gest ways that we believe the state of portability of OpenCL

fication is unclear and where different GPU implemen- GPUprogramscouldbeimprovedinSec.7. Finally, wecon-

tations exhibit different behaviours. clude in Sec. 8.

2. BACKGROUND ON OPENCL

OpenCL Programming. An OpenCL application consists of two parts: host code, usually executed on a CPU, and device code, which is executed on an accelerator device; in this paper we consider GPU accelerators. The host code is usually written in C or C++ (although wrappers for other languages now exist) and is compiled using a standard C/C++ compiler (e.g. gcc or MSVC). The OpenCL framework is accessed through library calls that allow for the set-up and execution of a supported device. The API for the OpenCL library is documented in the OpenCL specification [17], and it is up to the vendor to provide a conforming implementation that the host code can link to.

The device code is written in OpenCL C [14] (similar to C99). The code is written in an SIMT (single instruction multiple thread) manner, such that all threads execute the same code, but have access to unique thread identifiers. The device code must contain one or more entry functions where execution begins; these functions are called kernels.

OpenCL supports a hierarchical execution model that mirrors features common to some of the specialised hardware that OpenCL kernels are expected to execute on, in particular features common to many GPU architectures. Threads are partitioned into disjoint, equally-sized sets called workgroups. Threads within the same workgroup can use OpenCL primitives for efficient communication. For example, each workgroup has a disjoint region of local memory; only threads in the same workgroup can communicate using local memory. OpenCL also provides an intra-workgroup execution barrier. On reaching a barrier a thread waits until all threads in its workgroup have reached the barrier. Barriers can be used for deterministic communication. To aid in finer-grained and intra-device communication, OpenCL provides a set of atomic read-modify-write instructions where threads can atomically access, modify and store a value to memory. All device threads have access to a region of global memory.

Newer GPUs provide support for the OpenCL 2.0 memory model [17, pp. 35-53], which is similar to the C++11 memory model [13, pp. 1112-1129]. In this model, synchronisation memory locations must be declared with special atomic types (e.g. atomic_int). Accesses to these memory locations can be annotated with a memory order indicating the extent to which the access will synchronise with other accesses (e.g. release, acquire), and a scope in the OpenCL hierarchy to indicate with which other threads in the concurrency hierarchy the access should communicate (e.g. a scope can be intra-workgroup or inter-workgroup). If no memory order is provided, a default memory order of sequentially consistent is used [14, p. 103]. Rules on the orderings provided by these annotations are given both in the standard and (more formally) in recent academic work [5].

While support in OpenCL 2.0 facilitates finer-grained interactions between the host and device, traditionally the host and device interact at a coarse level of granularity, and this is the case for the applications we consider in this paper. The host and device do not share a memory region, thus the host must explicitly transfer any input data the kernel needs to the device through the OpenCL API. The host is responsible for then setting the kernel arguments and finally launching the kernel, again all using the OpenCL API.

A similar language for programming GPUs is CUDA [21]. This language is Nvidia-specific and thus not portable across GPU vendors.

Components of an OpenCL Environment. To enable OpenCL support for a given device, a vendor must provide a compiler for OpenCL C that targets the instruction set of the device, and a runtime capable of coordinating interaction between the host and the specific device. It is the role of the OpenCL specification to define requirements that the compiler and runtime must adhere to in order to successfully execute valid applications. It is the vendor’s job to ensure that these requirements are met in practice, and clarity in the OpenCL specification is essential to achieving this.

The device, compiler and runtime comprise a complete OpenCL environment. Issues in any one of these components can cause the contract between the OpenCL specification and the vendor-provided environment to be violated.

3. EVALUATED APPLICATIONS

This experience report is a by-product of an ongoing project that explores using the OpenCL 2.0 relaxed memory model [17, pp. 35-53] to design custom synchronisation constructs for GPUs. For that project, we sought benchmarks that might benefit from the use of fine-grained communication idioms. We discovered that applications containing irregular parallelism over dynamic workloads provided a good fit for our goals. With this in mind, we found two suites of open source benchmarks to experiment with: Pannotia [9] and Lonestar [8]. The applications are summarised in Tab. 2. The short names of Pannotia and Lonestar applications are prefixed with “p” and “ls”, respectively.

The Pannotia benchmarks were originally developed to examine fine-grained performance characteristics of irregular parallelism on GPUs, such as cache hit rate and data transfer time. The benchmarks were written in OpenCL 1.0, and evaluated using AMD GPUs. There are six applications in the benchmark suite in total, of which we consider four. The two applications we did not consider were structured in a way such that we could not easily see how to apply our experimental custom synchronisation constructs (recall that applying these constructs was what motivated us to evaluate these benchmarks across GPUs from a range of vendors).

The Lonestar applications were originally written in CUDA and evaluated using Nvidia GPUs; we ported these applications to OpenCL. Like the Pannotia applications, the Lonestar applications measure various performance characteristics of irregular applications, including control flow divergence between threads.

The Lonestar applications use non-portable, Nvidia-specific constructs, including single dimensional texture memory, warp-aware operations (e.g. warp shuffle commands), and a device-level barrier. For each, we attempted to provide portable OpenCL alternatives, changing texture memory to global memory, rewriting warp-aware idioms to use workgroup synchronisation, and using the OpenCL 2.0 memory model to write a device-level barrier. There are seven applications in the Lonestar benchmark suite, of which we consider four. Similar to the Pannotia benchmarks, the three applications we did not consider were structured in a way that we could not easily see how to apply our custom synchronisation constructs.

Both benchmark suites contain an sssp application; however, they are fundamentally different. The Lonestar version (ls-sssp) uses shared task queues to manage the dynamic workload. The Pannotia version (p-sssp) is implemented by iterating over common linear algebra methods. We thus consider them as two distinct applications.

4. FRAMEWORK BUGS

Here we outline three issues that we believe, to the best of our knowledge and debugging efforts, to be framework bugs. We experienced these issues when experimenting with custom synchronisation constructs in the applications of Tab. 2 across the chips of Tab. 1.

For each bug, we give a brief summary that includes a short description of the bug, the platforms on which we observed the bug, the status of the bug (indicating whether we have reported the issue and if so whether it is under investigation) and, if applicable, a work-around. We additionally give each issue a label for ease of reference in the text. After the summary, we elaborate more about how we came across the issue and our debugging attempts. Where we have not reported the issues, this is due to exposure of the issue requiring use of our custom synchronisation constructs, the fruits of an ongoing and as-yet-unpublished project. Once we publish these constructs, we will report the issues.

Framework bug 1: compiler crash

Summary: The OpenCL kernel compiler crashes nondeterministically.

Platforms: 5500 and 6100 (Intel)

Status: Unreported

Workaround: Add preprocessor directives to reduce the number of kernels passed to the compiler

Label: FB-CC

We encountered this error when experimenting with custom synchronisation constructs in the p-sssp application. The original application contained four kernel functions. Using our synchronisation construct, we implemented three new kernel functions, each of which performed some or all of the original computation using different approaches (e.g. by varying the number and location of synchronisation operations). For convenience, we located all seven kernel functions in a single source file.

We noticed that when we executed scripts to benchmark the different kernels, the application would crash roughly one in ten times with an unknown error, producing an output that looks like a memory dump. Our debugging efforts showed that the application was crashing when the OpenCL C compiler was invoked via the OpenCL API function clBuildProgram.

In an attempt to find the root cause of this issue, we tried to reduce the size of the OpenCL source file. We were able to reduce the problem to a kernel file that contained only two large kernel functions. At this point, when either of the kernel functions was removed, the error disappeared. Our hypothesis is that the error is due to the OpenCL kernel file containing multiple large kernel functions. We were able to work around this issue by surrounding the kernel functions in the kernel file with preprocessor conditionals. We then used the -D compiler flag to exclude all kernels except the one we were currently benchmarking.

We have not yet reported this issue as the kernels which cause the compiler to crash contain our custom synchronisation constructs.

Framework bug 2: deadlock with break-terminating loops

Summary: Loops without bounds (using break statements to exit) lead to kernel deadlock

Platforms: K5200 (Nvidia), R7, R9 (AMD)

Status: Unreported

Workaround: Re-write loop as a for loop with an over-approximated iteration bound

Label: FB-BTL

When experimenting with the Pannotia benchmarks, we found it natural to write the applications using an unbounded loop which breaks when a terminating condition is met (e.g. when there is no more work to process). The following code snippet illustrates this idiom:

    while (1) {
      terminating_condition = true;

      // do computation, setting terminating_condition
      // to false if there is more work to do

      if (terminating_condition) {
        break;
      }
    }

On K5200, R7 and R9, we discovered that this idiom can deterministically cause non-termination of the kernel. Our debugging attempts led us to substitute the infinite loop with a finite loop with large bounds (keeping the break statements). We began with a loop bound of INT_MAX. After this change, the applications correctly terminated. To determine if threads were actually executing the loop INT_MAX times, we tracked how many times each of the threads executed the loop. We observed that no thread actually executed the loop for INT_MAX iterations. That is, each thread terminated early through the break statement.

Given this, we believe that the non-termination in the original code with the infinite loop is due to a framework bug (e.g. a compiler bug). The work-around is to replace the while(1) loop header with a for loop header that uses a large over-approximation of the number of iterations of the loop that will actually be executed.

As with FB-CC, we did not report the issue yet because this example uses our currently unpublished synchronisation constructs. While we do not believe that the issue is related specifically to the new synchronisation constructs, it does seem that a suitably complex kernel is required to cause this behaviour; our attempts to reduce the issue to a significantly smaller example caused the problem to disappear.

Framework bug 3: defunct processes

Summary: GPU applications become defunct and unresponsive when run with a Linux host

Platforms: R7 and R9 (AMD)

Status: Known

Workaround: Change host OS to Windows

Label: FB-DP

In experimenting with new synchronisation constructs in the Pannotia applications we generated kernels that could potentially have high runtimes (around 30 seconds). Most systems we experimented with employed a GPU watchdog daemon (see Sec. 5) which catches and terminates kernels
